Fine-tuning a GPT — LoRA

This post explains LoRA, short for “Low-Rank Adaptation of Large Language Models”, a widely used fine-tuning method. I will walk you through the technique, its architecture, and its advantages, along with background concepts such as “low-rank” and “adaptation”. As in “Fine-tuning a GPT — Prefix-tuning”, I include a code example and go through it line by line. I pay particular attention to how GPU-intensive fine-tuning a Large Language Model (LLM) is, and then discuss practical remedies that are packaged in the Python libraries “bitsandbytes” and “accelerate”. After completing this article, you will be able to explain:

- Why do we still need fine-tuning?

- The challenges in adding more layers — Inference Latency

- What is the “rank” in the “low-rank” of LoRA?

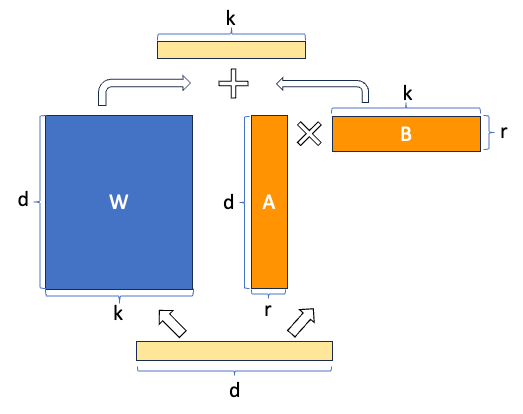

- The architecture of LoRA

- The advantages of LoRA

- Fine-tuning is still GPU-intensive

- The techniques to reduce the use of GPUs

- The code example

Why do we still need fine-tuning?

Pretrained Large Language Models (LLMs) have already been trained on many types of data for tasks such as text summarization, text generation, and question answering. Why do we still need to fine-tune an LLM? The simple reason is to adapt the LLM to your domain data so that it performs your specific tasks well. Just as a well-trained chef who already cooks Italian or Chinese cuisine only needs to transfer basic cooking knowledge and sharpen a few skills to take on another cuisine, an LLM only needs to adapt what it already knows. The terms “transfer learning” and “fine-tuning” are sometimes used interchangeably in NLP (for example, in “Parameter-Efficient Transfer Learning for NLP” by Neil Houlsby et al. (2019)). With the arrival of LLMs, the term “fine-tuning” has become even more common. (If you would like to learn more about transfer learning for images, take a look at “Transfer Learning for Image Classification — (1) All Start Here” or the book “Transfer learning for image classification — with Python examples”.)

The challenges in adding more layers — Inference Latency